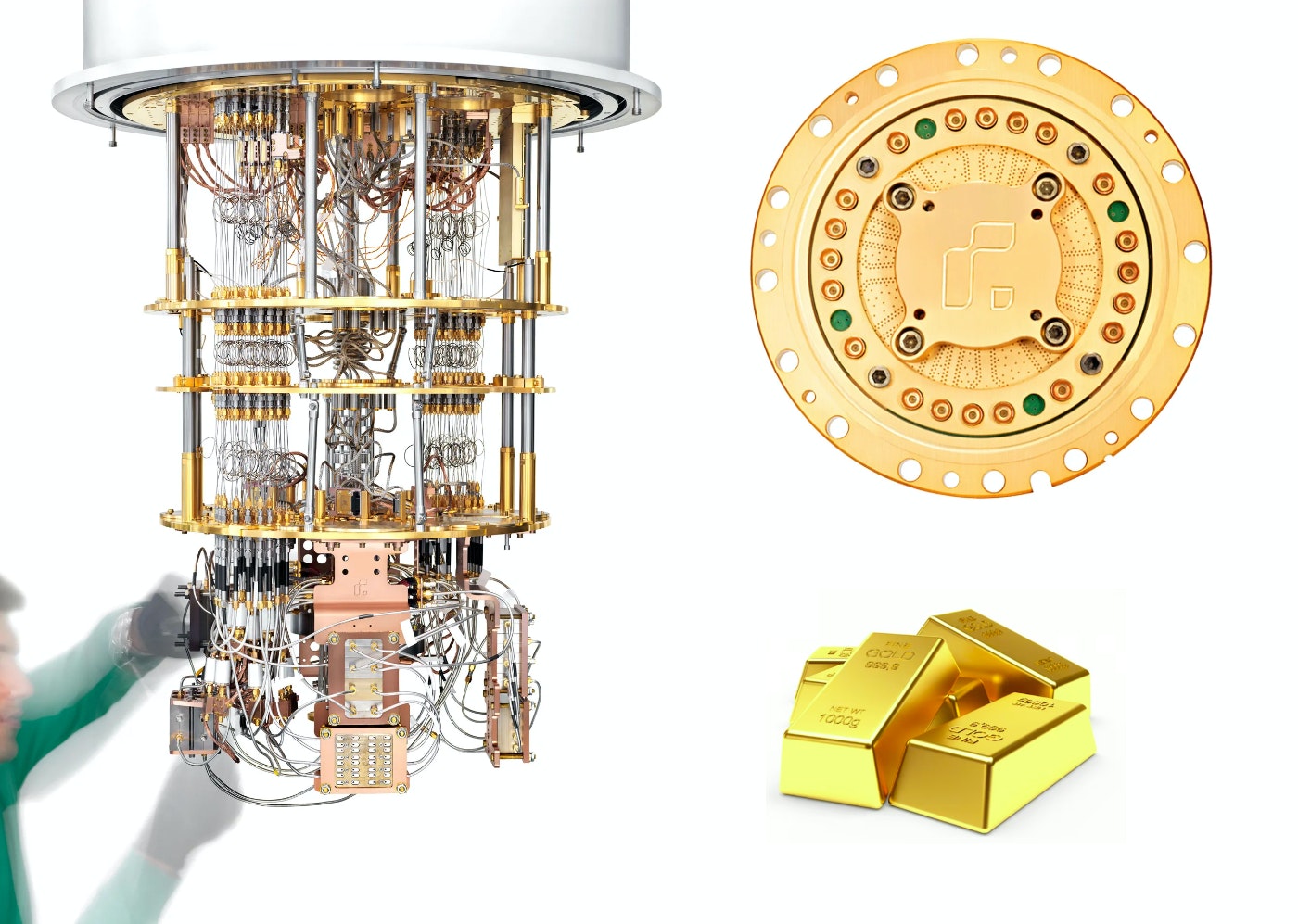

Quantum computers are the most expensive technology on Earth. I submit that their success is a direct measure of how rich a technological civilization is.

Riches here are measured in usable, carbon-neutral energy. (If we don’t count the carbon now, we shall still have to count it later.)

Moore’s law will not work for quantum computers

This idea came to me after watching a clip from Lex Fridman’s podcast with quantum physicist, applied mathematician and entrepreneur Guillaume Verdon.

To be honest, the first classical computers were built in ancient times, before Christ. But for practical purposes, they truly emerged on the global scene with the invention of the transistor at Bell Labs in 1947. And with the transistor, eventually, came Moore’s law.

“Moore's law is the observation that the number of transistors in an integrated circuit (IC) doubles about every two years” – Wikipedia. Gordon Moore posited this law in 1965, and it has held roughly true from 1975 to date.
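The doubling rule quoted above is plain exponential growth: N(t) = N₀ · 2^(t/2), with t in years. Here is a minimal sketch that assumes a perfectly clean two-year doubling and a hypothetical starting count. Real chips never follow the curve this smoothly.

```python
def projected_transistors(n0: float, years_elapsed: float, doubling_period: float = 2.0) -> float:
    """Hypothetical helper: project a transistor count, assuming it doubles
    every `doubling_period` years (Moore's law in its idealized form)."""
    return n0 * 2 ** (years_elapsed / doubling_period)


# A hypothetical chip with 1,000 transistors, projected 10 years forward.
print(projected_transistors(1_000, 10))  # 32000.0
```

Ten years is five doublings, so 1,000 transistors become 32,000. The hypothetical `projected_transistors` helper only makes that arithmetic explicit.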

It is a law indeed, but only for classical computers.

Why?

Just like artificial intelligence, a classical computer with a human user is a system trying to encode key details of entropic processes in the universe with as small an entropic model as possible.

To compute is to represent states in the universe (whether they occur naturally, in abstract mathematical worlds, or anywhere else) and to simulate how they change.

The less energy we can use to do this, the better.

Smaller transistors use less energy, and thankfully, Moore’s law has seen transistors, the basic units of computation, shrink tremendously from clunky, light-bulb-sized setups like the one below.

Source - https://en.wikipedia.org/wiki/Transistor#/media/File:Replica-of-first-transistor.jpg

Compare that with this highly magnified image of IBM’s 2-nanometer chip technology. It looks like an X-ray of some strange animal’s dental formula.

Source - https://time.com/collection/best-inventions-2022/6228819/ibm-two-nanometer-chip/

Each transistor in the image above is roughly the size of 5 atoms, and the chip’s 50 billion transistors fit on an area the size of a fingernail.

All this miniaturization has been possible because, at the fundamental level, we did not need much energy or material to represent a bit of information. We did not know it in 1965, when Gordon Moore postulated his law. We do now.

However, we need a lot of energy and materials to represent a logical qubit of information. And that is precisely why quantum computers will not scale according to Moore’s law.

As the number of qubits we need increases, the energy needed, and hence the cost of running these quantum computations, will likely grow to match the growth of the entire planet’s real, carbon-neutral GDP.

But qubits are cold. What do you mean they need a lot of energy?

Theoretically, a quantum computation consumes less energy than a classical computation. Because its operations are reversible, we could, in theory, run quantum computations on zero energy!

That is because, still in theory, nothing we put in is thrown away: every input can be recovered from the output. For example, the fundamental logic gate in classical computation, the NAND gate, looks like this:

NAND gate

You can see that 2 bits of information, A and B, go in, but only one bit of information goes out.

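Since Landauer showed that information is physical, and that erasing a single bit must dissipate at least k_B·T·ln 2 of energy as heat, this tells us that classical computers are wasteful of energy.

The reversible counterpart of the NAND gate used in quantum computing is the Toffoli gate, shown below.

Toffoli gate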
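Both halves of this argument fit in a few lines. The sketch below (the helper names are hypothetical) groups the NAND gate’s four possible inputs by output. An output of 1 could have come from three different inputs, so the gate cannot be run backwards. It then evaluates Landauer’s bound, k_B·T·ln 2 per erased bit, at an assumed room temperature of 300 K. Real transistors dissipate far more than this floor, because the bound is only the theoretical minimum.

```python
import math
from itertools import product

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def nand(a: int, b: int) -> int:
    """Classical NAND on bits: 0 only when both inputs are 1."""
    return 1 - (a & b)


# Group the four possible inputs by the output they produce.
preimages: dict[int, list[tuple[int, int]]] = {0: [], 1: []}
for a, b in product((0, 1), repeat=2):
    preimages[nand(a, b)].append((a, b))

# Output 1 has three possible inputs, so seeing it cannot tell us which one occurred.
print(preimages)  # {0: [(1, 1)], 1: [(0, 0), (0, 1), (1, 0)]}


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum heat released when erasing one bit at temperature T: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


print(f"{landauer_limit_joules(300):.3e} J per erased bit at 300 K")  # 2.871e-21 J ...
```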

3 inputs of energy to give 3 outputs of energy.

No energy wasted.
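The Toffoli gate’s reversibility can be checked directly on classical bits. On basis states it acts as a reversible classical gate: it flips the third bit only when the first two are both 1. The minimal sketch below uses a hypothetical `toffoli` helper, which is not a quantum-library API. It confirms three things: every input maps to a distinct output, the gate undoes itself, and fixing the third input to 1 turns the third output into NAND(a, b). That fixed third bit is the extra input that classical NAND never needed.

```python
from itertools import product


def toffoli(a: int, b: int, c: int) -> tuple[int, int, int]:
    """Flip target bit c only when both control bits a and b are 1."""
    return a, b, c ^ (a & b)


states = list(product((0, 1), repeat=3))
outputs = [toffoli(*s) for s in states]

# Reversible: the 8 inputs map onto 8 distinct outputs (a permutation)...
assert sorted(outputs) == sorted(states)
# ...and applying the gate twice returns the original input.
assert all(toffoli(*toffoli(*s)) == s for s in states)

# With the target fixed to 1, the target output equals NAND(a, b).
for a, b in product((0, 1), repeat=2):
    print(a, b, "->", toffoli(a, b, 1)[2])
```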

But wait: you need 3 qubit inputs, whereas in the classical case you only needed 2 bits. Already, we need more than usual.

Simulating nature is materially and energetically very expensive

Needing more energy pulses for our inputs barely scratches the surface of our energy budget when using quantum computers. The thing is, we are simulating nature using artificial nature that is millions of times bigger than what we are simulating.

Take superconducting qubits. Natural qubits, like “spinning” electrons, are so tiny that most cosmic rays miss them, and their quantum states survive. Superconducting qubits behave like artificial spinning electrons that we can easily control. The downside is that they are big enough for cosmic rays, by the millions, to see them very clearly. And the rays hit them.

That is just one form of external noise. It costs us and it is going to keep costing us.

We need and shall keep needing a lot of energy to keep energetic noise like cosmic rays out of our quantum systems.

As Verdon explains, the trick up our sleeve is to build error-correcting codes of codes of codes of codes on top of the noisy system, which eventually gives us a noiseless system (in the average case). But this makes the system bigger, and hence the cooling budget bigger.

Heat is the second form of external noise, and it arguably should have been the first one we talked about. Take the analogy of the spinning electron again: sitting comfortably in its orbit, it is rarely bumped hard enough by heat-carrying infrared radiation to have its spin interrupted.

The electron is electrostatically bound to the nucleus, and if it shares its orbit with another electron, the two are essentially entangled and, again, harder to knock out of their stable configuration.

Third, we suffer such high energy costs because it takes a huge amount of energy to keep track of quantum states with our measurements.
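The trade-off can be sketched with the standard textbook estimate for concatenated codes. Below a threshold error rate p_th, each extra level of encoding roughly squares the ratio p/p_th, while multiplying the number of physical qubits by the code’s block size n. The numbers below are hypothetical: a 7-qubit code, a physical error rate of 10⁻³ and a threshold of 10⁻². They were chosen only to show the shape of the curve.

```python
def concatenated_code(
    physical_error: float, threshold: float, block_size: int, levels: int
) -> list[tuple[int, int, float]]:
    """Textbook estimate for a code concatenated `levels` times (hypothetical helper).

    Each level encodes one qubit in `block_size` qubits of the level below and,
    below threshold, the logical error rate at level k is roughly
        p_k = threshold * (physical_error / threshold) ** (2 ** k)
    Returns (level, physical qubits per logical qubit, estimated error) tuples.
    """
    rows = []
    for k in range(levels + 1):
        error = threshold * (physical_error / threshold) ** (2 ** k)
        rows.append((k, block_size ** k, error))
    return rows


# Hypothetical numbers: 7-qubit block, physical error 1e-3, threshold 1e-2.
for level, qubits, error in concatenated_code(1e-3, 1e-2, 7, 3):
    print(f"level {level}: {qubits:>4} physical qubits per logical qubit, error ~ {error:.0e}")
```

Each level makes the error rate fall doubly exponentially. But the qubit count grows by a factor of seven per level, and so does the hardware that has to be cooled.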
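The Boltzmann distribution gives a rough estimate of why heat matters so much for artificial qubits. A two-level system with transition frequency f at temperature T sits in its excited state with probability 1/(1 + e^(hf/k_BT)). The minimal sketch below assumes a superconducting qubit with a 5 GHz transition, which is a typical order of magnitude rather than a figure from the article. It also assumes a 20 mK operating temperature, typical of dilution refrigerators.

```python
import math

PLANCK_J_S = 6.62607015e-34       # exact SI value of the Planck constant
BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def thermal_excited_population(frequency_hz: float, temperature_k: float) -> float:
    """Probability that a two-level system in thermal equilibrium is in its excited state."""
    x = PLANCK_J_S * frequency_hz / (BOLTZMANN_J_PER_K * temperature_k)
    return 1.0 / (1.0 + math.exp(x))


# Assumed 5 GHz qubit: room temperature vs. an assumed 20 mK fridge temperature.
for temperature in (300.0, 0.02):
    p = thermal_excited_population(5e9, temperature)
    print(f"{temperature:>7} K: {p:.2e}")
```

At room temperature the qubit is essentially a coin flip. At 20 mK the thermal excitation probability drops to a few parts per million, which is why the cooling bill is unavoidable.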

Nature doesn’t do measurements, we do. We are thus adding information (a measurement) to our simulation of nature. Hence more energy (remember Landauer).

Measurement also introduces unnecessary energy into a quantum system, which disturbs the position and momentum of our particles à la Heisenberg’s uncertainty principle. It also doesn’t help that collapsing the wave function of a superposed quantum state into one of its many possible outcomes, chosen at random, gives us exactly zero information about the hidden variables of that state.

This is true randomness, not the pseudorandomness that classical computers mimic. Our classical randomization algorithms are all pseudorandom.
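The “pseudo” part is easy to demonstrate. A pseudorandom generator is a deterministic algorithm, so the same seed always reproduces the same sequence. Here is a minimal sketch using Python’s standard `random.Random`, which implements the Mersenne Twister. The `pseudorandom_bits` helper is hypothetical.

```python
import random


def pseudorandom_bits(seed: int, count: int) -> list[int]:
    """Draw `count` bits from Python's Mersenne Twister PRNG with an explicit seed."""
    rng = random.Random(seed)
    return [rng.getrandbits(1) for _ in range(count)]


first = pseudorandom_bits(seed=42, count=16)
second = pseudorandom_bits(seed=42, count=16)

# Same seed, same "random" sequence: the output is fully determined by the seed.
print(first == second)  # True
```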

Einstein complained about this.

Therefore, we usually have to make many repeated measurements to estimate the probability distribution of our quantum states, whether to learn how to nudge them or to mitigate errors. These repeated rounds of initialization plus measurement are called “shots”, and we normally need them in the thousands. They all consume a lot of energy. Hence money.
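Shots run into the thousands for a statistical reason. Estimating a probability p from N shots has a standard error of √(p(1 − p)/N), so each extra decimal place of precision costs 100 times more shots. The minimal sketch below simulates shots with a classical pseudorandom generator, which is an assumption for illustration only; a real device would supply the measurement outcomes.

```python
import math
import random


def estimate_probability(true_p: float, shots: int, seed: int = 0) -> float:
    """Simulate `shots` measurements that yield 1 with probability true_p
    and return the observed frequency of 1s (hypothetical helper)."""
    rng = random.Random(seed)
    ones = sum(1 for _ in range(shots) if rng.random() < true_p)
    return ones / shots


def standard_error(p: float, shots: int) -> float:
    """Binomial standard error of a probability estimated from `shots` samples."""
    return math.sqrt(p * (1 - p) / shots)


for shots in (100, 1_000, 10_000):
    estimate = estimate_probability(0.5, shots)
    print(f"{shots:>6} shots: estimate {estimate:.3f}, expected error ~ {standard_error(0.5, shots):.3f}")
```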

We need a bigger budget

Here’s a law for you:

“If we check every 2 years for the next 2 decades, qubits will keep being more expensive to run for computation than transistors”

Funding is growing exponentially, but the best we can show for it so far is 433 qubits. Still, we cannot afford to slow down; we are close to a great use case.

If we keep this enthusiasm up, we shall get somewhere. More money equals more qubits.

For now, progress in quantum computing will keep growing to match increasing funding, but with zero profits, and it will not break even the way classical computing has. You know, with some sort of Moore’s law for qubits.

Certainly, there are learning curves that take us from poor qubits to better qubits, and these curves reduce energy costs and hence budgetary constraints. But at the lower limit, running perfect simulations of natural models is going to cost us big time and will never be cheaper than classical computation en masse.

Classical computers might be approximations of nature, but they show us that we can go very far with approximating nature.

However, since we sometimes need to take a critical look at reality, if only to break out of approximations that are hitting their limits, we need to be able to pay for it. Big quantum computational systems like LIGO cost $1.1 billion, but that price has to be paid if you want to look up at the clear night sky and see gravitational waves. No classical computational system, no matter how cleverly built, will be able to encode everything that is going on in LIGO.

The e/acc movement is right: humanity needs to climb more rungs of the Kardashev scale. We need to be able to consume hundreds of times more usable, carbon-free energy than we use today. This energy is what truly pays for everything, including quantum computations. And while the technology may not be good enough for profitable desktop quantum PCs, quantum computers will, every once in a while, give us a view of reality we did not expect. A view that reignites our interest in the world.
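The Kardashev scale can be made continuous with Carl Sagan’s interpolation, K = (log₁₀ P − 6)/10 with P in watts, which puts a Type I civilization at 10¹⁶ W. The minimal sketch below assumes a rough figure of 2 × 10¹³ W for humanity’s current power use. The `kardashev_rating` helper is hypothetical.

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Sagan's interpolation of the Kardashev scale: K = (log10(P) - 6) / 10, P in watts."""
    return (math.log10(power_watts) - 6) / 10


# Assumed, rough figure for humanity's current power use: about 2e13 W.
today = kardashev_rating(2e13)
hundredfold = kardashev_rating(100 * 2e13)
print(f"today ~ {today:.2f}, with 100x the power ~ {hundredfold:.2f}")
```

The scale is logarithmic, so consuming a hundred times more energy moves us up only 0.2 of a rung, from roughly 0.73 to roughly 0.93.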

Quantum computers nudge humanity and its classical computational approximators ever closer to true greatness, closer than we would be had we never discovered the power of simulating nature with a quantum computational model.

Even though they might be the most expensive technology on Earth.

***

P.S. Because running quantum computations is so expensive, our fears of futuristic quantum computers hacking our email are unfounded. Social hacking will remain a cheaper way into your email than quantum computers. And if your password is PASSWORD, please do not blame quantum computers.