In this video, we're diving deep into the groundbreaking technology of MVDream, a game-changing AI model that's redefining what's possible in the realm of 3D modeling.

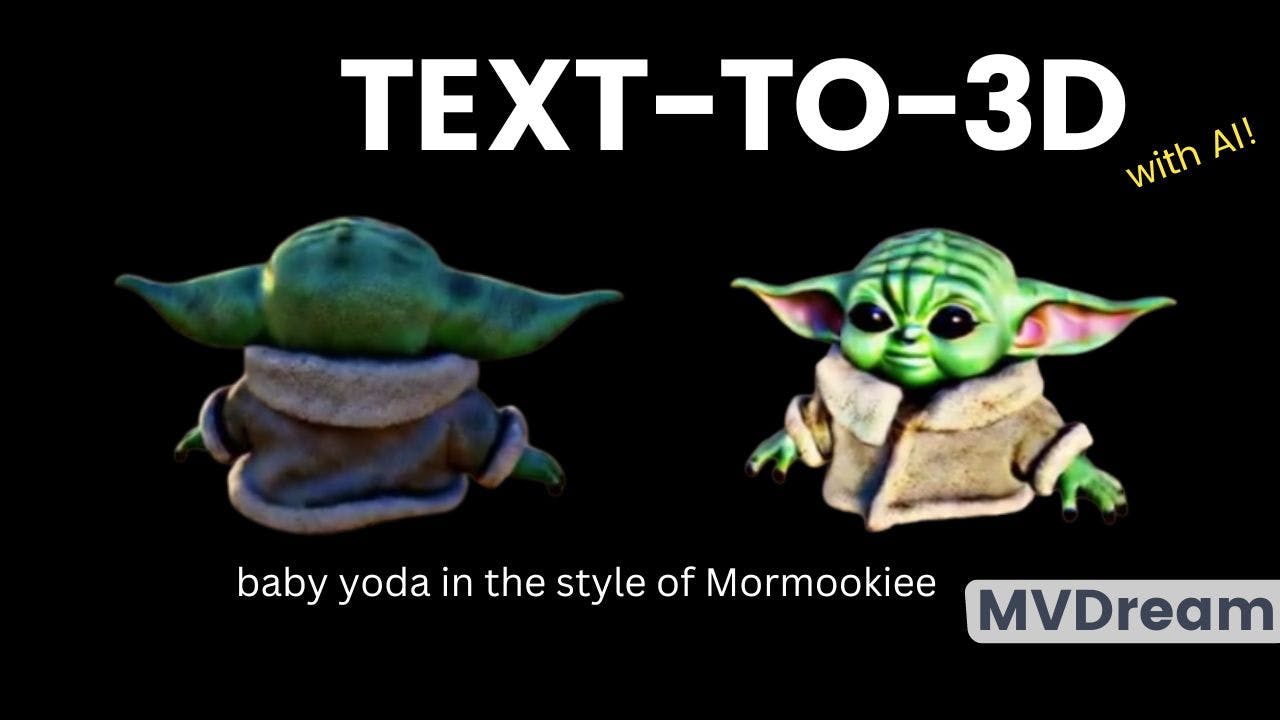

Before we jump right on this exploration, let's set the stage. Text-to-3D is a cutting-edge field where AI takes ordinary text descriptions and transforms them into astonishing 3D objects, bridging the imaginative power of words with the visual impact of 3D modeling. It's like bringing your wildest ideas to life in a tangible form (not just images!), and MVDream is leading the way in this transformative arena.

Artificial Intelligence (AI) has been making giant strides in recent years, from generating text to crafting lifelike images and even

. But the real challenge lies in turning simple textual descriptions into intricate 3D models, complete with all the fine details and realistic features that our world is composed of. That's where MVDream steps in, not as just another initial step but as a monumental leap forward in 3D model generation from text.

In this video, we'll unveil the astounding capabilities of MVDream. You'll witness how this AI understands the physics of 3D modeling like never before, creating high-quality, realistic 3D objects from nothing more than a short sentence. We'll showcase its prowess in comparison with other approaches, highlighting its ability to produce spatially coherent, real-world objects, free from the quirks of past models.

But how does MVDream work its magic? We'll delve into the inner workings of this AI powerhouse, exploring its architecture and the ingenious techniques it employs. You'll discover its evolution from a 2D image diffusion model to a multi-view diffusion model, enabling it to generate multiple views of an object and, most importantly, to ensure the consistency of the 3D models it creates.

Of course, no technology is without its limitations, and MVDream is no exception. We'll also discuss its current constraints, including resolution and dataset size. These are crucial factors that impact the applicability of this impressive technology.

So, join us on this exciting journey as we unravel the mysteries behind MVDream, a model that's rewriting the rules of 3D modeling, and explore the incredible possibilities it offers. If you're curious about the intersection of AI, 3D modeling, and the future of creativity, this video is a must-watch. Let's dive right in!

foreign

I'm super excited to share this new AI

model with you we've seen so many new

approaches to generating text then

generating images only getting better

after that we've seen other amazing

initial works for generating videos and

even 3D models out of text just imagine

the complexity of such a task when all

you have is a sentence and you need to

generate something that could look like

a real object in our real world with all

its details well here's a new model that

is not merely an initial step it's a

huge step forward in 3D model generation

from just text mvdream as you can see it

seems like mvdream is able to understand

physics compared to previous approaches

in jetset it knows that the view should

be realistic with only two ears and not

two for any possible views it ends up

creating a very high quality 3D model

out of just this simple line of text how

cool is this but what's even cooler is

how it works so let's dive into it but

before doing so let me introduce a super

cool company answering the video with

another application of artificial

intelligence voice synthesis introducing

kit.ai a platform for artists producers

and fans to create AI voice models with

ease and even create monetizable work

with licensed AI voice models of your

favorite artists kids that AI offers a

library of licensed artist voices and

royalty-free library and a community

library with voice models of characters

and celebrities created by the users you

can even train your own voice with one

click simply provide audio files of the

voice you want to replicate and kids

that AI will create an AI voice model

for you to use with no back-end

knowledge required generate voice model

conversion by providing a nakapella file

recording audio manually or even

inputting a YouTube link for easy vocal

separation and that's pretty cool since

I can do it pretty easily get started

with kits.ai using the first link in the

description right now

now let's get back to the 3D World if

you look at a 3D model the biggest

challenge is that they need to generate

both realistic and high quality images

for each view from where you are looking

at it and those views have to be

spatially coherent with each other not

like the four eared Yoda we previously

saw are multi-phase subjects we see

since we rarely have people from the

back in any image data set so the model

kind of wants to see faces out because

one of the main approaches to generating

3D models is to simulate a view angle

from a camera and then generate what it

should be seeing from this Viewpoint

this is called 2D lifting since we

generate regular images to combine them

into a full 3d scene then we generate

all possible views from around the

object that is why we are used to seeing

weird artifacts like these since the

model is just trying to generate one

View at a time and doesn't understand

the overall object well enough in the 3D

space well MP dream made a huge step in

this Direction They tackled what we call

the 3D consistency problem and even

claimed to have solved it using a

technique called score distillation

sampling introduced by dream Fusion

another text to 3D method that was

published in late 2022 which I covered

on the channel by the way if you enjoyed

this video and these kinds of new

technologies you should definitely

subscribe I cover new approaches like

this one every week on the channel

before entering into the score

distribution sampling Technique we need

to know about the architecture they are

using in short it's yet just another 2D

image diffusion model like Dali

mid-journey or stable diffusion more

specifically they started with a

pre-trained dreambooth Model A powerful

open source model to generate images

based on stable diffusion that I already

covered on the channel then the change

they made was to render a set of

multi-view images directly instead of

only one image thanks to being trained

on a 3D data set of various objects here

we take multiple views from the 3D

object that we have in our data set and

use them to train the model to generate

them backward this is done by changing

the self-attention block we see here in

blue for a 3D one meaning that we simply

add a dimension to reconstruct multiple

images at a time instead of one below

you can see the camera and time step

that is also being inputted into the

model for each view to help the model

understand where which image is going

and what kind of view needs to be

generated now all the images are

connected and generated together so they

can share information and better

understand the global content then you

feed it your text and train the model to

reconstruct the objects from a data set

accurately this is where they apply

their multi-view score distillation

sampling process I mentioned they now

have a multi-view diffusion model which

can generate well multiple views of an

object but they needed to reconstruct

consistent 3D models not just views so

this is often done using Nerf or neural

Radiance Fields as it is done with trim

Fusion which we mentioned earlier it

basically uses the trained multi-view

diffusion model that we have and freezes

it meaning that it is just being used

and not being trained we start

generating an initial image version

Guided by our caption and initial

rendering with added noise using our

multi-view diffusion model we add noise

so that the model knows it needs to

generate a different version of the

image while still receiving context for

it then we use the model to generate a

higher quality image add the image used

to generate it and remove the Noise We

manually added to use this result to

guide and improve our Nerf model for the

next step we do all that to better

understand where in the image the Nerf

model should focus its attention to

produce better results in the next step

and we repeat that until the 3D model is

satisfying enough and voila this is how

they took a 2d text to image model

adapted it for multiple view synthesis

and finally used it to create a text to

3D version of the model iteratively of

course they added many technical

improvements to the approaches they

based themselves on which I did not

enter into for Simplicity but if you are

curious I definitely invite you to read

their great paper for more information

they are also still some limitations

with this new approach mainly that the

generations are only of 256 by 256

pixels which is quite low resolution

even though the results look incredible

they also mentioned that the size of the

data set for this task is definitely a

limitation for the generalizability of

the approach this was an overview of

Envy dream and thank you for watching I

will see you next time with another

amazing paper

thank you

foreign

[Music]

References:

►Read the full article: https://www.louisbouchard.ai/mvdream/

►Shi et al., 2023: MVDream, https://arxiv.org/abs/2308.16512

►Project with more examples: https://mv-dream.github.io/

►Code (to come): https://github.com/MV-Dream/MVDream

►Twitter: https://twitter.com/Whats_AI

►My Newsletter (A new AI application explained weekly to your emails!): https://www.louisbouchard.ai/newsletter/

►Support me on Patreon: https://www.patreon.com/whatsai

►Join Our AI Discord: https://discord.gg/learnaitogether