Authors:

(1) Ruohan Zhang, Department of Computer Science, Stanford University, Institute for Human-Centered AI (HAI), Stanford University & Equally contributed;

(2) Sharon Lee, Department of Computer Science, Stanford University & Equally contributed;

(3) Minjune Hwang, Department of Computer Science, Stanford University & Equally contributed;

(4) Ayano Hiranaka, Department of Mechanical Engineering, Stanford University & Equally contributed;

(5) Chen Wang, Department of Computer Science, Stanford University;

(6) Wensi Ai, Department of Computer Science, Stanford University;

(7) Jin Jie Ryan Tan, Department of Computer Science, Stanford University;

(8) Shreya Gupta, Department of Computer Science, Stanford University;

(9) Yilun Hao, Department of Computer Science, Stanford University;

(10) Ruohan Gao, Department of Computer Science, Stanford University;

(11) Anthony Norcia, Department of Psychology, Stanford University;

(12) Li Fei-Fei, Department of Computer Science, Stanford University & Institute for Human-Centered AI (HAI), Stanford University;

(13) Jiajun Wu, Department of Computer Science, Stanford University & Institute for Human-Centered AI (HAI), Stanford University.

Table of Links

Brain-Robot Interface (BRI): Background

Conclusion, Limitations, and Ethical Concerns

Appendix 1: Questions and Answers about NOIR

Appendix 2: Comparison between Different Brain Recording Devices

Appendix 5: Experimental Procedure

Appendix 6: Decoding Algorithms Details

Appendix 7: Robot Learning Algorithm Details

Abstract

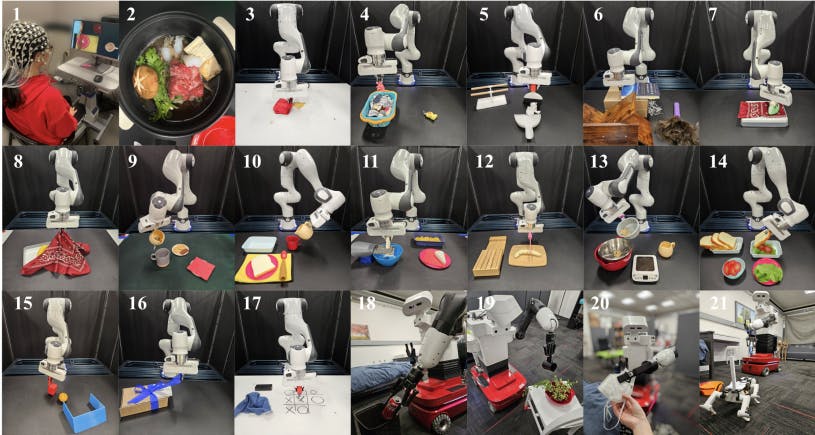

We present Neural Signal Operated Intelligent Robots (NOIR), a general-purpose, intelligent brain-robot interface system that enables humans to command robots to perform everyday activities through brain signals. Through this interface, humans communicate their intended objects of interest and actions to the robots using electroencephalography (EEG). Our system succeeds across an expansive array of 20 challenging everyday household activities, including cooking, cleaning, personal care, and entertainment. The system's effectiveness is further improved by its synergistic integration of robot learning algorithms, which allow NOIR to adapt to individual users and predict their intentions. Our work enhances the way humans interact with robots, replacing traditional channels of interaction with direct neural communication. Project website: https://noir-corl.github.io/

Keywords: Brain-Robot Interface; Human-Robot Interaction

Figure 1: NOIR is a general-purpose brain-robot interface that allows humans to use their brain signals (1) to control robots to perform daily activities, such as making Sukiyaki (2), ironing clothes (7), playing Tic-Tac-Toe with friends (17), and petting a robot dog (21).

1 Introduction

Brain-robot interfaces (BRIs) represent a pinnacle aspiration in art, science, and engineering. The idea, which features prominently in speculative fiction, innovative artwork, and groundbreaking scientific studies, entails creating robotic systems that operate in perfect synergy with humans. A critical component of such systems is their ability to communicate with humans. In human-robot collaboration and robot learning, humans communicate their intents through actions [1], button presses [2, 3], gaze [4–7], facial expressions [8], language [9, 10], and other channels [11, 12]. However, direct communication through neural signals stands out as the most exciting, yet most challenging, medium.

We present Neural Signal Operated Intelligent Robots (NOIR), a general-purpose, intelligent BRI system built on non-invasive electroencephalography (EEG). The primary principle of this system is hierarchical shared autonomy: humans define high-level goals, while the robot actualizes those goals by executing low-level motor commands. Building on progress in neuroscience, robotics, and machine learning, our system goes beyond previous attempts and makes the following contributions.

First, NOIR is general-purpose in both the diversity of tasks it supports and its accessibility. We show that humans can accomplish an expansive array of 20 everyday activities, in contrast to existing BRI systems, which are typically specialized for one or a few tasks or exist solely in simulation [13–22]. In addition, the system can be used by the general population with minimal training.

Second, the “I” in NOIR means that our robots are intelligent and adaptive. The robots are equipped with a library of diverse skills, allowing them to perform low-level actions without dense human supervision. Human behavioral goals can be naturally communicated, interpreted, and executed by the robots through parameterized primitive skills, such as Pick(obj-A) or MoveTo(x,y). Moreover, our robots can learn humans' intended goals during collaboration. We show that, by leveraging recent progress in foundation models, such a system can be made more adaptive with limited data, and that this significantly increases its efficiency.
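To make the idea of parameterized primitive skills concrete, here is a minimal Python sketch of a skill library. The human supplies only a skill name and its parameters, and the library dispatches to a routine that stands in for the robot's low-level controller. The names `Skill`, `SKILL_LIBRARY`, `pick`, `move_to`, and `execute`, and their string outputs, are hypothetical illustrations, not NOIR's actual interface.

```python
from dataclasses import dataclass
from typing import Callable, Dict


def pick(obj: str) -> str:
    # Hypothetical placeholder for a grasping controller; returns a description instead of moving a robot.
    return f"grasp {obj}"


def move_to(x: float, y: float) -> str:
    # Hypothetical placeholder for a navigation controller.
    return f"navigate to ({x:.2f}, {y:.2f})"


@dataclass(frozen=True)
class Skill:
    name: str
    fn: Callable[..., str]
    arity: int  # number of parameters the human must supply


SKILL_LIBRARY: Dict[str, Skill] = {
    "Pick": Skill("Pick", pick, 1),
    "MoveTo": Skill("MoveTo", move_to, 2),
}


def execute(skill_name: str, *params: object) -> str:
    """Dispatch a high-level command (skill + parameters) to its low-level routine."""
    skill = SKILL_LIBRARY[skill_name]
    if len(params) != skill.arity:
        raise ValueError(f"{skill_name} expects {skill.arity} parameter(s), got {len(params)}")
    return skill.fn(*params)


print(execute("Pick", "obj-A"))      # grasp obj-A
print(execute("MoveTo", 0.4, 1.25))  # navigate to (0.40, 1.25)
```

Checking the number of parameters at dispatch time is one simple way to reject an incompletely specified command before it reaches the robot; a real system would replace the string-returning stubs with motion planning and control.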

The key technical contributions of NOIR include a modular pipeline for decoding human intentions from neural signals. Decoding a human's intended goal (e.g., “pick up the mug by the handle”) from neural signals is extremely challenging. We decompose human intention into three components: What object to manipulate, How to interact with the object, and Where to interact, and show that these components can be decoded from different types of neural data. The decomposed signals map naturally onto parameterized robot skills and can therefore be communicated effectively to the robots.
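The What/How/Where decomposition can be sketched as three independent decoders whose outputs are assembled into a single parameterized skill call. In this minimal Python sketch, each decoder is stubbed as an argmax over hypothetical class scores, and the Where component is assumed to be a 2-D interaction point. `DecodedIntent`, `argmax_label`, `decode_intent`, and `to_skill_call` are illustrative names, and the sketch does not reproduce the paper's actual neural decoders.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class DecodedIntent:
    what: str                   # object to manipulate, e.g. "mug"
    how: str                    # skill to apply to it, e.g. "Pick"
    where: Tuple[float, float]  # assumed 2-D interaction point on the object


def argmax_label(scores: Dict[str, float]) -> str:
    """Stand-in decoder: return the label with the highest score."""
    return max(scores, key=lambda label: scores[label])


def decode_intent(object_scores: Dict[str, float],
                  skill_scores: Dict[str, float],
                  point: Tuple[float, float]) -> DecodedIntent:
    # Each component comes from its own (here stubbed) decoder, so the modules
    # can be developed and swapped independently.
    return DecodedIntent(what=argmax_label(object_scores),
                         how=argmax_label(skill_scores),
                         where=point)


def to_skill_call(intent: DecodedIntent) -> str:
    # How selects the skill; What and Where become its parameters.
    x, y = intent.where
    return f"{intent.how}({intent.what}, where=({x:.2f}, {y:.2f}))"


intent = decode_intent({"mug": 0.7, "bowl": 0.2, "spoon": 0.1},
                       {"Pick": 0.6, "Push": 0.4},
                       (0.05, 0.12))
print(to_skill_call(intent))  # Pick(mug, where=(0.05, 0.12))
```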

In 20 household activities involving tabletop or mobile manipulation, three human subjects successfully used our system to accomplish the tasks with their brain signals. We demonstrate that few-shot robot learning from humans can significantly improve the efficiency of our system. This approach to building intelligent robotic systems, which uses human brain signals for collaboration, holds immense potential for developing critical assistive technologies for individuals with or without disabilities and for improving their quality of life.
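One simple way to picture few-shot learning of human intentions is retrieval: store an embedding of each past situation together with the skill call the human chose, and for a new situation propose the choice from the most similar stored example, falling back to full neural decoding when nothing is similar enough. The following Python sketch assumes embeddings are precomputed vectors (for instance, from a pretrained foundation model). The class name `FewShotIntentMemory`, the cosine-similarity rule, and the 0.9 threshold are hypothetical, and the sketch is not meant to reproduce the robot learning algorithm detailed in Appendix 7.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class FewShotIntentMemory:
    """Hypothetical nearest-neighbor memory of (situation embedding, chosen skill call) pairs."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self.examples: List[Tuple[List[float], str]] = []

    def add(self, embedding: Sequence[float], skill_call: str) -> None:
        # Record one human demonstration: the situation and the command the user issued.
        self.examples.append((list(embedding), skill_call))

    def predict(self, embedding: Sequence[float]) -> Optional[str]:
        # Return the skill call of the most similar stored situation,
        # or None so the caller falls back to full neural decoding.
        best_call: Optional[str] = None
        best_sim = -1.0
        for stored, call in self.examples:
            sim = cosine_similarity(stored, embedding)
            if sim > best_sim:
                best_call, best_sim = call, sim
        return best_call if best_sim >= self.threshold else None


memory = FewShotIntentMemory(threshold=0.9)
memory.add([1.0, 0.0, 0.2], "Pick(mug)")
memory.add([0.0, 1.0, 0.1], "Pick(bowl)")
print(memory.predict([0.9, 0.1, 0.2]))  # Pick(mug)
print(memory.predict([0.5, 0.5, 0.0]))  # None (no stored example is similar enough)
```

In a design like this, a retrieved guess would be proposed rather than executed outright, so the user would only need to confirm or reject it instead of specifying every component from scratch.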

This paper is available on arXiv under a CC 4.0 license.